NVIDIA is pushing architectural, hardware-side updates and software-side or algorithmic updates, but all of them relate back to Turing. The biggest changes to the core architecture are as follows:

Integer and floating-point operations can now execute concurrently, whereas Pascal would suffer a pipeline stall when integer operations were calculated. Turing has independent datapaths for floating-point math and integer math; in Pascal, one would block the other when queuing for execution. Floating-point units (CUDA cores) often sit idle in Pascal, and NVIDIA is trying to reduce that idle time with greater concurrency.

A new L1 cache and unified memory subsystem will accelerate shader processing. NVIDIA is moving away from the Pascal system of separate shared memory units and L1 units. Under that system, for applications which do not use shared memory, that SRAM is wasted and doing nothing. Turing unifies the SRAM structures so that software can use one structure for all of the SRAM.

Turing has moved to 2 SMs per TPC and has split the FPUs between them, resulting in a GPU which runs 64 FPUs per streaming multiprocessor, whereas Pascal hosted 128 FPUs per SM, but had 1 SM per TPC instead. The end result is the same number of FPUs per TPC, but better segmentation of hardware, which benefits memory allocation.
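As a rough illustration of two of these points, here is a minimal Python sketch. It is a toy model, not how the hardware actually schedules work: the function names are ours, and the 100:35 instruction mix is borrowed from the ratio nVidia's own model assumes (roughly 35 integer operations per 100 floating-point operations). It ignores warps, dependencies, and memory latency entirely, so treat the result as an idealized upper bound.

```python
# Toy model only: real GPUs schedule warps, not single instructions,
# and real workloads have dependencies that this ignores.


def fpus_per_tpc(sms_per_tpc: int, fpus_per_sm: int) -> int:
    """FPUs (CUDA cores) in one TPC."""
    return sms_per_tpc * fpus_per_sm


def issue_slots(fp_ops: int, int_ops: int, concurrent: bool) -> int:
    """Issue slots needed for a mix of FP and INT instructions.

    Shared datapath (Pascal-like, simplified): every instruction
    takes its own slot, so INT work delays FP work.
    Independent datapaths (Turing-like, simplified): the two streams
    overlap, so the longer stream sets the total.
    """
    if concurrent:
        return max(fp_ops, int_ops)
    return fp_ops + int_ops


# Figures from the text: Pascal has 1 SM x 128 FPUs, Turing 2 SMs x 64 FPUs.
pascal_tpc = fpus_per_tpc(sms_per_tpc=1, fpus_per_sm=128)
turing_tpc = fpus_per_tpc(sms_per_tpc=2, fpus_per_sm=64)

# Assumed instruction mix: 35 INT operations per 100 FP operations.
serial = issue_slots(fp_ops=100, int_ops=35, concurrent=False)
overlap = issue_slots(fp_ops=100, int_ops=35, concurrent=True)

print(f"FPUs per TPC: Pascal {pascal_tpc}, Turing {turing_tpc}")
print(f"Issue slots: shared {serial}, independent {overlap}")
print(f"Idealized speedup: {serial / overlap:.2f}x")
```

In this simplified model, the FPU count per TPC is identical for both architectures, and the gain from concurrent INT/FP execution tops out at 1.35x for that instruction mix. Real-world gains depend on how much integer work a given shader actually contains.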

Turing Changes - Technical Overview

Anyway, let’s get into the technical details. There are three kinds of processing going on in Turing: shading, ray tracing, and deep learning. NVidia tried to find a way to demonstrate Turing's advantages over Pascal, and so created the RTX-OPS formula to weight all of the different performance vectors. Just to go through the formula, here it is:

FP32 * 80% + INT32 * 28% + RTOPS * 40% + TENSOR * 20% = 78

For this formula, nVidia is assuming that 20% of time goes into DNN processing and 80% goes into FP32. The company’s model also assumes that there are roughly 35 integer operations per 100 FP operations, so 35% of 80% yields a multiplication factor of 0.28 for INT32. After this, RTOPS is added (10 TFLOPS per gigaray), of which nVidia takes 40%, and finally Tensor throughput is added after being multiplied by 0.2.

At first, this formula might sound like a marketing way to inflate the differences between the 2080 Ti and the 1080 Ti, and it probably is, but the math does work. In reality, these cards won’t be anywhere close to 600% different.
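The weighting in the RTX-OPS formula can be sketched in a few lines of Python. The weights come from the formula above. The input throughput figures (14 TFLOPS FP32, 14 TIPS INT32, 10 gigarays per second, 114 Tensor TFLOPS) are not stated in this article; they are assumed here from nVidia's published RTX 2080 Ti Founders Edition figures and should be treated as illustrative. The function and constant names are ours.

```python
# Weights taken from the RTX-OPS formula in the text.
FP32_SHARE = 0.80   # share of frame time spent on FP32 shading
DNN_SHARE = 0.20    # share of frame time spent on DNN (Tensor) work
INT_PER_FP = 0.35   # ~35 integer ops per 100 floating-point ops
RT_SHARE = 0.40     # share applied to ray-tracing throughput


def rtx_ops(fp32_tflops: float, int32_tips: float,
            rtops: float, tensor_tflops: float) -> float:
    """Weighted sum following the RTX-OPS formula."""
    int_weight = INT_PER_FP * FP32_SHARE  # 35% of 80% = 0.28
    return (fp32_tflops * FP32_SHARE
            + int32_tips * int_weight
            + rtops * RT_SHARE
            + tensor_tflops * DNN_SHARE)


# ASSUMED inputs (not from the article): nVidia's published
# RTX 2080 Ti Founders Edition throughput figures.
gigarays_per_second = 10
rtops = gigarays_per_second * 10  # 10 TFLOPS per gigaray, per the text

score = rtx_ops(fp32_tflops=14, int32_tips=14,
                rtops=rtops, tensor_tflops=114)
print(f"RTX-OPS: {score:.2f}")
```

With those assumed inputs, the weighted sum comes out just under 78, matching the headline number. Note that the ray-tracing and Tensor terms account for most of the total, which is why the metric says little about games that use neither.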

There are a lot of assumptions in the RTX-OPS formula, and most of them won’t apply to gaming workloads. In its marketing materials, nVidia claimed 78 Tera RTX-OPS on the 2080 Ti versus 11.3 on the 1080 Ti. By pure numbers, this makes it sound like the 2080 Ti is 6x faster than the 1080 Ti, which is misleading: that’s only true in nVidia’s perfect model workload.

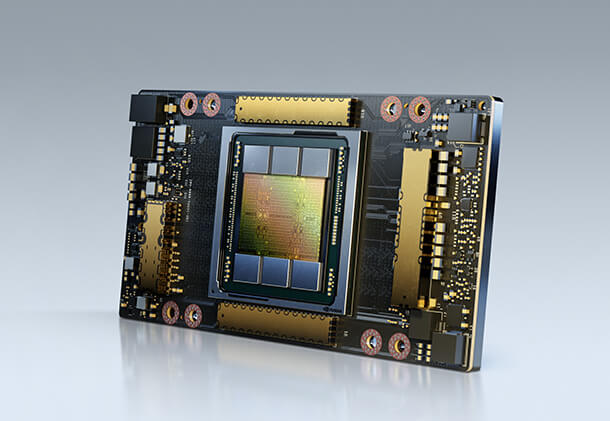

We’ll start with a quick overview of the major changes to Turing versus Pascal, then dive deeper into each aspect. We will also be focusing on how each feature applies to gaming, ignoring some of the adjacent benefits of Turing’s updates.

One of nVidia’s big things for this launch was to discuss cards in terms of “RTX-OPS,” a new metric that the company created. NVidia invented this as a means to quantify the performance of its tensor cores, as traditional TFLOPS measurements don’t look nearly as impressive when comparing the 20-series versus the 10-series. NVidia marketing recognized this and created RTX-OPS, wherein it weights traditional floating-point, integer, and tensor performance, alongside deep neural net performance, to try and illustrate where the Turing architecture will stand out. As for how relevant it is to modern games, the answer is “not very.” There are certainly ways in which Turing improves over Pascal, and we’ll cover those, but this perceived 6x difference is not realistic to gaming workloads.

Pascal Architecture

Above: The NVIDIA TU102 block diagram (RTX 2080 Ti block diagram, but with 4 more SMs)
